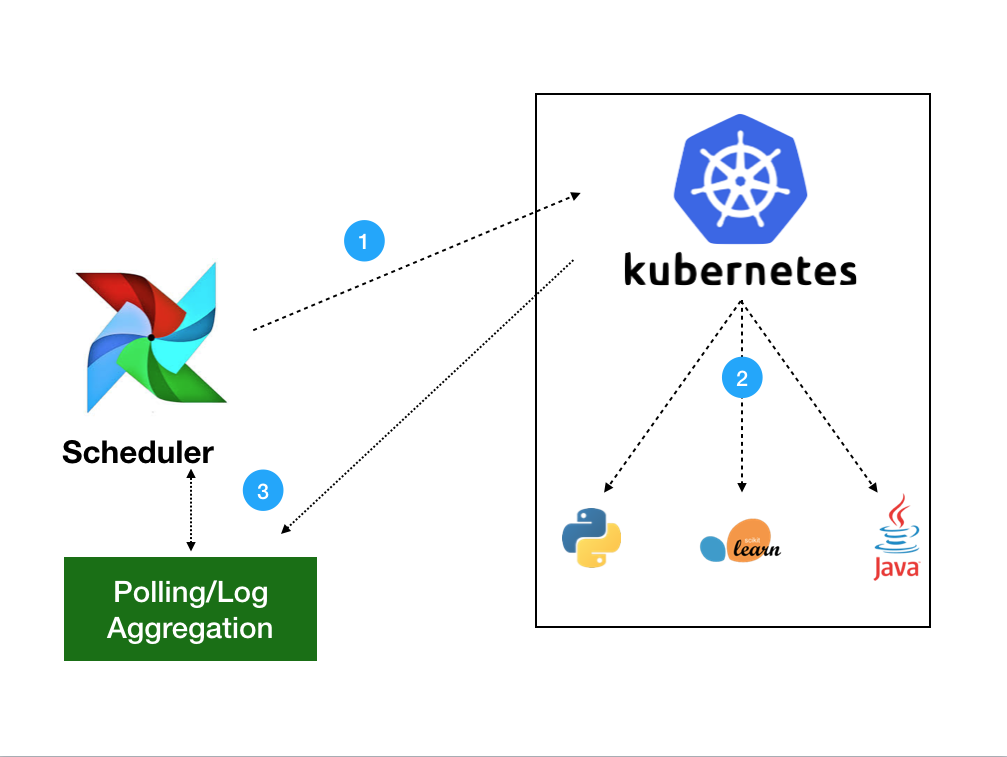

When combined, the capabilities of DataRobot MLOps and Apache Airflow provide a reliable solution for retraining and redeploying your models.

#Data apache airflow insight software

Thorsten Weber, bbv Software Services AG

By far the best resource for Airflow.

Integrate DataRobot and Apache Airflow for Retraining and Redeploying Models.

An easy-to-follow exploration of the benefits of orchestrating your data pipeline jobs with Airflow. Daniel Lamblin, Coupang

The one reference you need to create, author, schedule, and monitor workflows with Apache Airflow.

about the author

Bas Harenslak and Julian de Ruiter are data engineers with extensive experience using Airflow to develop pipelines for major companies.

Set up Airflow in production environments.

For DevOps, data engineers, machine learning engineers, and sysadmins with intermediate Python skills.

Apache Airflow is an open-source workflow management platform for data engineering pipelines.

To summarise Dataflow: Apache Beam is a framework for developing distributed data processing, and Google offers a managed service called Dataflow. Often people seem to regard this as a complex solution, but it's effectively like cloud functions for distributed data processing: just provide your code, and it will run and scale the service.

#Data apache airflow insight how to

Part reference and part tutorial, this practical guide covers every aspect of the directed acyclic graphs (DAGs) that power Airflow, and how to customize them for your pipeline's needs.

In this session, Michael Collado from Datakin will show how to trace data lineage and useful operational metadata in Apache Spark and Airflow pipelines, and talk about how OpenLineage fits in the context of data pipeline operations and provides insight into the larger data ecosystem.

You'll explore the most common usage patterns, including aggregating multiple data sources, connecting to and from data lakes, and cloud deployment.

about the book

Data Pipelines with Apache Airflow teaches you how to build and maintain effective data pipelines. Its easy-to-use UI, plug-and-play options, and flexible Python scripting make Airflow perfect for any data management task. Apache Airflow provides a single platform you can use to design, implement, monitor, and maintain your pipelines.

about the technology

Data pipelines manage the flow of data from initial collection through consolidation, cleaning, analysis, visualization, and more. Using real-world scenarios and examples, Data Pipelines with Apache Airflow teaches you how to simplify and automate data pipelines, reduce operational overhead, and smoothly integrate all the technologies in your stack. Apache Airflow provides a single customizable environment for building and managing data pipelines, eliminating the need for a hodgepodge collection of tools, snowflake code, and homegrown processes.
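To make the idea of a directed acyclic graph (DAG) of tasks more concrete, here is a minimal sketch in plain Python. It does not use the Airflow API; it relies only on the standard-library `graphlib` module (Python 3.9+), and the task names (`extract`, `clean`, `aggregate`, `report`) and helper functions are hypothetical, chosen purely for illustration. It shows how task dependencies determine a valid run order, and why a cycle makes scheduling impossible.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract": set(),
    "clean": {"extract"},
    "aggregate": {"clean"},
    "report": {"aggregate", "clean"},
}


def run_order(dependencies):
    """Return one valid execution order in which dependencies come first."""
    return list(TopologicalSorter(dependencies).static_order())


def is_acyclic(dependencies):
    """Return True if the dependency graph has no cycles, i.e. it is a DAG."""
    try:
        run_order(dependencies)
        return True
    except CycleError:
        return False


order = run_order(pipeline)
print(order)
print(is_acyclic({"a": {"b"}, "b": {"a"}}))
```

A scheduler such as Airflow builds on the same principle: a task becomes eligible to run only once everything upstream of it has finished, which is why the graph must not contain cycles.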

A successful pipeline moves data efficiently, minimizing pauses and blockages between tasks, keeping every process along the way operational.

Leverage existing Python skills to dynamically author and schedule workflows within Cloud Composer. Increase reliability of your workflows through easy-to-use charts for monitoring and troubleshooting the root cause of an issue.

Cloud Composer's managed nature allows you to focus on authoring, scheduling, and monitoring your workflows as opposed to provisioning resources.

During environment creation, Cloud Composer provides the following configuration options: Cloud Composer environment with a route-based GKE cluster (default), Private IP Cloud Composer environment, Cloud Composer environment with a VPC Native GKE cluster using alias IP addresses, and Shared VPC.

Video description: An Airflow bible. Useful for all kinds of users, from novice to expert.
